Rechtin on Ultraquality - Establishing Relative Risks

Failure Modes and Effects Analysis (FMEA)

Excerpted from Systems Architecting, Creating and Building Complex Systems, Eberhardt Rechtin, Prentice Hall, 1991.

TECHNICAL RESPONSE II: ESTABLISHING RELATIVE RISKS

Specifying absolute risk levels for ultraquality systems ahead of time is, of course, just as impractical as certifying ultraquality for acceptance later on. In neither case can the data be obtained by test or measurement, so the specification becomes meaningless. Reliance, instead, defaults to counting on the presumed excellence of the systems team to deliver. Experience shows that approach to be dangerous. All problems, discrepancies, and anomalies will tend to be treated alike. Great effort may be expended where it is not needed, while too little effort goes where the risks are highest. The result, in more than one major program, was a thousand possibilities for catastrophic failure, all presumably low. When the system proved to be less than ultraquality, it was hard to know where to start fixing it.

But if absolute risks cannot be estimated, it may nonetheless be possible to estimate relative ones. Is this element more or less likely to fail than another one? For example, does it have more parts, or does it require tighter tolerances?
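To make the idea of a relative estimate concrete, here is a minimal Python sketch that compares two elements using only the two indicators mentioned above: part count and tolerance tightness. The `Element` class, its fields, and the dominance rule are hypothetical illustrations, not part of Rechtin's method. One element is judged riskier only when it is at least as bad on both indicators and strictly worse on one; mixed cases are left to engineering judgment.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Element:
    """A system element described only by relative indicators (hypothetical fields)."""
    name: str
    part_count: int               # more parts -> more ways to fail
    tightest_tolerance_mm: float  # smaller value -> tighter tolerance, harder to hold


def more_likely_to_fail(a: Element, b: Element) -> Optional[bool]:
    """True if a is judged riskier than b, False if b is riskier, None if undecided.

    Dominance rule: an element is riskier only if it is at least as bad on both
    indicators and strictly worse on one. No absolute probability is computed.
    """
    a_at_least_as_bad = (a.part_count >= b.part_count
                         and a.tightest_tolerance_mm <= b.tightest_tolerance_mm)
    b_at_least_as_bad = (b.part_count >= a.part_count
                         and b.tightest_tolerance_mm <= a.tightest_tolerance_mm)
    if a_at_least_as_bad and not b_at_least_as_bad:
        return True
    if b_at_least_as_bad and not a_at_least_as_bad:
        return False
    return None  # mixed or equal evidence: needs engineering judgment


valve = Element("valve", part_count=40, tightest_tolerance_mm=0.01)
bracket = Element("bracket", part_count=3, tightest_tolerance_mm=0.5)
print(more_likely_to_fail(valve, bracket))
```

Returning `None` for mixed evidence is deliberate: the sketch ranks elements without ever pretending to know an absolute failure probability.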

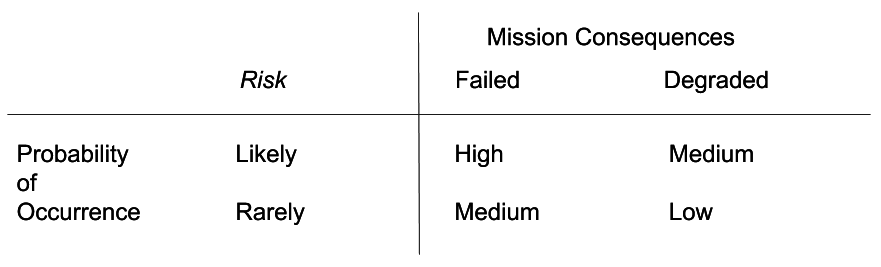

One technique for answering such questions is failure modes and effects analysis (FMEA). The proposed architecture is laid out, and the following questions are asked about each component: What can fail, under what circumstances, with what relative risk, and with what consequences? FMEA is a tedious process, but it asks the right questions and forces consideration of every answer. It highlights single-point failures, that is, single failures that mean catastrophe. Ideally, all single-point failures would be eliminated by design. If not, the designer must be prepared to show why not. If a single-point failure is accepted, some assessment of its relative risk must be made, as defined by Figure 8-2.
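An FMEA worksheet can be sketched as a list of rows, one per failure mode, that records the answers to the four questions above. The following minimal Python sketch assumes hypothetical fields `catastrophic`, `redundant`, and `justification` so that it can flag single-point failures and, among them, those accepted without a stated reason.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FailureMode:
    """One row of a hypothetical FMEA worksheet."""
    component: str
    mode: str                 # what can fail
    circumstances: str        # under what circumstances
    relative_risk: str        # e.g. "high", "medium", "low" from a relative risk diagram
    consequence: str          # with what consequences
    catastrophic: bool        # does this failure alone mean catastrophe?
    redundant: bool           # is there a backup or work-around?
    justification: Optional[str] = None  # why an accepted single-point failure was kept


def single_point_failures(rows: List[FailureMode]) -> List[FailureMode]:
    """Failures that by themselves mean catastrophe because nothing backs them up."""
    return [r for r in rows if r.catastrophic and not r.redundant]


def unjustified(rows: List[FailureMode]) -> List[FailureMode]:
    """Single-point failures kept in the design without a stated reason."""
    return [r for r in single_point_failures(rows) if not r.justification]


# Hypothetical worksheet rows, for illustration only.
rows = [
    FailureMode("valve", "fails closed", "cold soak", "medium", "no flow", True, False),
    FailureMode("gyro", "drift", "long burn", "low", "attitude error", True, True),
    FailureMode("latch", "stuck", "launch loads", "low", "no deployment", True, False,
                "qualified by test"),
]
for row in unjustified(rows):
    print(f"{row.component}: eliminate by design or show why not")
```

The redundant gyro drops out of the single-point list, and the latch stays on it but carries a justification, so only the valve is reported as needing action.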

Risk diagrams of this sort are clearly judgmental, but they accomplish the important purpose of distinguishing the critical from the merely important. If a failure mode is in the upper left box, it had better be eliminated entirely. If it is in the lower right box, the choices are straightforward and less consequential: fix now, fix later, accept the risk, and so on. The difficult decisions are in the medium-risk boxes; they involve judgments about the practicality of any fix, its costs in money and time, and the likely consequences of the specific action being contemplated.
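Placing a failure mode in a box can be sketched in Python as an ordinal lookup. Because Figure 8-2 itself is not reproduced here, the 3-by-3 grid, the `Level` scale, and the band boundaries below are assumptions: only the box that is both most likely and most severe counts as high risk, boxes with a combined rating of 2 or 3 count as medium, and the rest count as low. The actions are paraphrased from the paragraph above.

```python
from enum import IntEnum


class Level(IntEnum):
    """Ordinal ratings; only their order matters, not any absolute probability."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2


# Responses per risk band, paraphrased from the text.
ACTIONS = {
    "high": "eliminate the failure mode entirely",
    "medium": "weigh practicality of a fix, its cost in money and time, and consequences",
    "low": "choose directly: fix now, fix later, or accept the risk",
}


def risk_box(likelihood: Level, consequence: Level) -> str:
    """Place a failure mode in an assumed 3-by-3 relative risk diagram.

    Combined rating 4 (most likely and most severe) -> "high";
    combined rating 2 or 3 -> "medium"; combined rating 0 or 1 -> "low".
    """
    score = int(likelihood) + int(consequence)
    if score == 4:
        return "high"
    if score >= 2:
        return "medium"
    return "low"


box = risk_box(Level.LOW, Level.HIGH)
print(box, "->", ACTIONS[box])
```

Under these assumed boundaries an unlikely but catastrophic failure lands in a medium box, which is where the text says the hard judgments belong.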

The test of a good architect, or of a good project manager, is the ability to put components and elements into the right risk boxes, after which the available resources can be most effectively allocated.

Figure 8-2. Relative risk diagram.

Presentations of risk assessments are a regular agenda item in launch-readiness reviews of Air Force space systems. Typically, there are no high-risk items, that is, failure modes that are likely to occur and would fail the mission; the review would not be held until they were eliminated. But there are often a handful of medium risks and tens to hundreds of low risks. The low risks are usually accepted if both the systems people and the independent reviewers agree with the low-risk designation. The decision process for the medium-risk items can take weeks of presentations, discussions, experiments, and analyses. The review is risk management of the highest order. It is easy enough to decide no when everyone else so recommends. It is much harder to decide yes when everyone else says no. Yet, under national priorities to put critical space systems into immediate operation, the stakes are too high to take the easy way out. Such was the case for the developmental U.S. ASAT (air-launched antisatellite) missile on September 13, 1985. It was a 50:50 risk, but the outcome was favorable. For an equally urgent Titan 34-D, however, for which the consensus and decision were yes, a previously undetected manufacturing-process flaw resulted in an explosion just after launch.
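The launch-readiness triage described above can be sketched in Python. The `RiskItem` fields and the "re-examine" bucket for low-risk designations that the systems people and independent reviewers dispute are assumptions; the text says only that agreed low risks are usually accepted, that medium risks go through an extended decision process, and that high risks must be gone before the review is held.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RiskItem:
    """A risk presented at the review (hypothetical fields)."""
    name: str
    box: str                     # "high", "medium", or "low"
    systems_team_says_low: bool  # systems people agree with a low designation
    reviewers_say_low: bool      # independent reviewers agree with it


def readiness_agenda(items: List[RiskItem]) -> Dict[str, List[str]]:
    """Sort risk items into review buckets (a simplified sketch of the process)."""
    if any(i.box == "high" for i in items):
        raise ValueError("high-risk items remain: eliminate them before holding the review")
    agenda: Dict[str, List[str]] = {"accepted": [], "re-examine": [], "decide": []}
    for item in items:
        if item.box == "medium":
            agenda["decide"].append(item.name)      # presentations, experiments, analyses
        elif item.systems_team_says_low and item.reviewers_say_low:
            agenda["accepted"].append(item.name)    # both parties agree it is low risk
        else:
            agenda["re-examine"].append(item.name)  # assumed: disputed low-risk designation
    return agenda


print(readiness_agenda([
    RiskItem("A", "low", True, True),
    RiskItem("B", "low", True, False),
    RiskItem("C", "medium", True, True),
]))
```

The sketch only routes items; the actual yes-or-no decision on each medium risk remains the judgment the text describes.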

Example:

An urgent launch of a critical satellite was required because an in-orbit satellite was degrading rapidly at the end of its life. A medium-risk failure mode (a component of the type used in the satellite had failed in another application) was reported at the mission-readiness review. Launch was postponed until the applicability of the failure to the satellite could be determined.

Example:

Just before launch, a spacecraft experienced a component failure. The system architecture was such that the component was redundant and that work-arounds could be accomplished should its replacement fail (possibly from the same, as yet unknown, cause). The spacecraft was launched and operated successfully.

Therefore,

If being absolute is impossible in estimating system risks, then be relative.
