Rechtin on Ultraquality - Continuing Reassessment

Continuous Reflection and Problems that Arise Along the Journey

Excerpted from Systems Architecting: Creating and Building Complex Systems, Eberhardt Rechtin, Prentice Hall, 1991.

ARCHITECTURAL RESPONSE III: CONTINUING REASSESSMENT

One of the distinguishing traits of a good architect, as noted in Part One, is to pause and reflect as the conceptual phase comes to a close, reassessing the proposed system model before advising the client that it is acceptable to move ahead. It is a trait that can be put to good use in striving for ultraquality systems. In the same way that builders improve a system by progressive redesign, the architect can improve it by continuing reassessment as the project proceeds.

Despite the best efforts to produce an ultraquality conceptual model, obstacles will arise later that will seem to thwart reaching the goal. A route will have to be found that avoids, goes around, or surmounts each obstacle. Like a pilot bringing a major ship into port, the architect must keep assessing the situation and be able to recommend a change of course to reach the desired destination: a credible ultraquality system.

*Can we still make it? Is the planned route still the right one? Are we running into more and more serious trouble going this way? Is there an alternate destination that is still satisfactory?*

And, underlying all of these, the continuing question:

Is the system inherently capable of ultraquality?

Problem 1: The Unrecognized Architectural Flaw

Of the many obstacles to reaching ultraquality, the most serious is an unrecognized architectural flaw. This is a system structure that will fail even if all the elements perform as assumed, yet is not detected until late in development. The only preventive is repeated and, if necessary, independent critiques.

Example:

For multiengine aircraft and launch vehicles, can the mission be accomplished if an engine fails? Any engine? If not, then the architecture may be less reliable than one using a single engine.
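The following is a rough sketch of why this can happen. It rests on stated assumptions:

* Each engine survives the flight independently with probability r. The 0.99 below is a hypothetical figure.
* There are no common-mode failures.
* No engine failure damages its neighbors.

Under these assumptions, the mission succeeds if at least k of n engines survive. An architecture in which every engine is needed has k = n.

```python
from math import comb


def k_of_n_reliability(r: float, n: int, k: int) -> float:
    """Probability that at least k of n independent engines survive,
    each surviving with probability r (binomial k-of-n model)."""
    return sum(comb(n, i) * r**i * (1 - r) ** (n - i) for i in range(k, n + 1))


R = 0.99  # hypothetical per-engine reliability

single = k_of_n_reliability(R, 1, 1)        # one engine, which must work
all_required = k_of_n_reliability(R, 4, 4)  # four engines, every one needed
engine_out = k_of_n_reliability(R, 4, 3)    # four engines, any one may fail

print(f"single engine:          {single:.6f}")
print(f"4 engines, all needed:  {all_required:.6f}")
print(f"4 engines, engine-out:  {engine_out:.6f}")
```

With these numbers, the four-engine design that needs every engine comes out near 0.961. That is below the single engine's 0.99. The same four engines with engine-out capability reach about 0.9994. The extra engines help only if the architecture can actually tolerate losing one.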

Example:

Launching a payload into orbit with rocket engines is a matter of imparting sufficient kinetic energy to stay in orbit. That energy, to a first approximation, can be delivered either somewhat faster or slower than it is delivered on the nominal launch trajectory. Thus, if the launch-vehicle engines underdeliver in thrust due to a somewhat lower-than-normal rate of consumption of propellants, orbit may still be achieved, though at a later time. A recent launch vehicle contained a propellant-shutoff timer whose purpose was to preclude an uncontrolled thrust termination in case the guidance system failed to act. The timer was set for a few seconds after the nominal time. Unfortunately, the vehicle produced significantly less thrust than normal, the timer shut down the engines, and the vehicle failed to reach orbit. To preclude a relatively minor occurrence, a major failure was made possible.
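The arithmetic behind this failure can be sketched with a deliberately crude model. The model is an assumption, not something from the source:

* The required total impulse is fixed.
* Thrust is constant at some fraction of nominal.
* Burn time therefore scales as the inverse of that fraction.
* Gravity losses, which would make a slow burn longer still, are ignored.

The nominal burn time and timer margin below are hypothetical.

```python
def required_burn_time(nominal_burn_s: float, thrust_fraction: float) -> float:
    """Burn time needed to deliver the nominal impulse at reduced thrust.

    Crude model (an assumption): total impulse is fixed, so burn time
    scales as 1 / thrust.
    """
    return nominal_burn_s / thrust_fraction


def reaches_orbit(nominal_burn_s: float, thrust_fraction: float,
                  timer_margin_s: float) -> bool:
    """True if the burn can finish before the backup shutoff timer fires."""
    cutoff_s = nominal_burn_s + timer_margin_s
    return required_burn_time(nominal_burn_s, thrust_fraction) <= cutoff_s


def min_thrust_fraction(nominal_burn_s: float, timer_margin_s: float) -> float:
    """Lowest thrust fraction the timer tolerates before it ends the mission."""
    return nominal_burn_s / (nominal_burn_s + timer_margin_s)


NOMINAL_BURN_S = 300.0  # hypothetical nominal burn time
TIMER_MARGIN_S = 5.0    # hypothetical "few seconds" of timer margin

print(min_thrust_fraction(NOMINAL_BURN_S, TIMER_MARGIN_S))
for fraction in (1.00, 0.99, 0.98):
    print(fraction, reaches_orbit(NOMINAL_BURN_S, fraction, TIMER_MARGIN_S))
```

Under these assumptions, a 5 s margin on a 300 s burn means the timer, not the engines, ends the mission once thrust falls below about 98.4% of nominal. A 2% shortfall that the vehicle could otherwise have absorbed becomes a failure to reach orbit.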

Example:

One of the critical elements of an aerospace vehicle is the computerized attitude-control system. In a proposed design, three identical computers were to be used in parallel, with majority vote used to choose the presumably correct output. But there was a concern that the identical software of all the units might contain errors. Therefore, another computer was employed using different and simpler software to run in parallel with the first three as a check on their majority-vote output. Question for the reader: Is the design robust? Hint: What happens when the fourth computer's output differs from that of the other three?
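The voting logic as described can be sketched as follows. The sketch assumes discrete outputs that can be compared for equality; real attitude commands are continuous and would need a tolerance. The names are hypothetical. The sketch is useful because the code must return something when the checker disagrees with the majority, and the design as described does not say what that should be.

```python
from collections import Counter
from enum import Enum
from typing import List, Optional, Tuple


class Verdict(Enum):
    AGREE = "majority and checker agree"
    NO_MAJORITY = "primary computers split three ways"
    CHECKER_DISAGREES = "majority and checker disagree"


def vote(primary_outputs: List[int], checker_output: int) -> Tuple[Optional[int], Verdict]:
    """2-of-3 majority vote over the identical primaries, then a comparison
    against the independent, simpler checker (hypothetical interface)."""
    value, count = Counter(primary_outputs).most_common(1)[0]
    if count < 2:
        return None, Verdict.NO_MAJORITY
    if value != checker_output:
        # The design as described leaves open which answer to act on here.
        return value, Verdict.CHECKER_DISAGREES
    return value, Verdict.AGREE


for primaries, checker in [([5, 5, 5], 5), ([5, 5, 7], 5), ([5, 5, 5], 6), ([1, 2, 3], 1)]:
    value, verdict = vote(primaries, checker)
    print(primaries, checker, value, verdict.name)
```

Deciding what a caller should do with `CHECKER_DISAGREES` is exactly the question posed above.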

Example:

A well-established communications security technique is to encrypt transmissions with a bit-stream code known to both sending and receiving parties. However, the codes must be changed from time to time, leading to a code-distribution problem. One proposal was to send two simultaneous transmissions on different channels. One transmission was the encrypted signal and the other the bit-stream code alone. For all practical purposes, each transmission was unbreakable. Comparing the two transmissions would then yield the original signal. Question for the reader: Why is the system not secure, although the individual transmissions were?
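The scheme can be sketched in a few lines. This assumes that signal and code are combined by exclusive-OR, which is common for bit-stream codes but not stated in the source. Each channel on its own looks like random bytes, and the receiver recovers the message by combining them. The message text is hypothetical.

```python
import secrets


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bytewise exclusive-OR of two equal-length byte strings."""
    if len(a) != len(b):
        raise ValueError("streams must be the same length")
    return bytes(x ^ y for x, y in zip(a, b))


message = b"LAUNCH AT 0600"                    # hypothetical plaintext
keystream = secrets.token_bytes(len(message))  # random bit-stream code

channel_1 = xor_bytes(message, keystream)  # encrypted signal
channel_2 = keystream                      # the code itself, on a second channel

# The receiver combines the two transmissions to recover the signal.
recovered = xor_bytes(channel_1, channel_2)
print(recovered)
```

Notice what the combining step requires: nothing but the contents of the two transmissions. That observation is the place to start on the question posed above.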

Example:

Passenger and freight carriers seldom use fleets of a single vehicle type. Manufacturers of fleet vehicles seldom make only one size or type of vehicle. Most fleets have vehicles with a geometric (multiplicative) relationship in sizes and weights. The same construct is found among telecommunications common carriers, in multiplexed channels, alternate routes, and different communications media. An FMEA of the fleet would show the reason: a single vehicle type is a single-point failure of the fleet.
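The FMEA point can be made quantitative with a small sketch. It rests on hypothetical assumptions:

* Each vehicle type can be grounded fleet-wide, for example by a design defect.
* Every type has the same probability p of being grounded in some period.
* Each type is grounded independently of the others.

Shared engines or suppliers would break that independence. The function returns the probability that surviving capacity drops below a chosen fraction of the fleet.

```python
from itertools import product
from typing import Sequence


def prob_capacity_below(shares: Sequence[float], p_grounded: float,
                        threshold: float) -> float:
    """Probability that the capacity still flying falls below `threshold`.

    `shares` is each vehicle type's fraction of total fleet capacity; each
    type is grounded independently with probability `p_grounded`.
    """
    total = 0.0
    # Enumerate every combination of grounded / flying vehicle types.
    for grounded in product((False, True), repeat=len(shares)):
        prob = 1.0
        remaining = 0.0
        for share, is_grounded in zip(shares, grounded):
            prob *= p_grounded if is_grounded else 1.0 - p_grounded
            if not is_grounded:
                remaining += share
        if remaining < threshold:
            total += prob
    return total


P = 0.05  # hypothetical chance that a given type is grounded fleet-wide
print(prob_capacity_below([1.0], P, 0.25))            # single-type fleet
print(prob_capacity_below([0.5, 0.3, 0.2], P, 0.25))  # three types
```

With these assumptions, the single-type fleet loses more than three quarters of its capacity with probability 0.05, the probability of its one type being grounded. The three-type fleet does so only when at least its two largest types are grounded together, about 0.0025. The expected capacity is the same in both cases. What changes is the chance of a catastrophic loss.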

Problem 2: Excessive Internal Complexity

One of the most powerful techniques for reaching ultraquality is to keep attacking the quality-complexity relationship at its source: complexity. As Art Raymond puts it in a variation on KISS, "Simplify. Simplify. Simplify." Architects and clients alike would agree. It is just common sense to keep the conceptual model as simple as possible while still satisfying the client's needs.

But a too-simple conceptual model can hide underlying complexity that can put system quality at risk. Complexity is a breeding ground for errors. But it grows in a quite understandable way. One of the best partitioning heuristics, minimum communications, by calling for minimal external complexity for each element, can drive complexity inward into the elements. As each element is further partitioned, the system can become still more complex—and less likely to reach ultraquality. The question needs to be continually raised: Is the system becoming increasingly complex as we move into design, manufacture, and operations?

Are we fighting component deficiencies with complex fault-tolerant designs? Are we forcing software down to the assembly-language level? Are we demanding extraordinary precision or unprecedented cleanliness in manufacturing? Is documentation becoming burdensome?

A stubborn tendency for internal complexity to grow, in fact, may be traceable to a prior system decision, modification of which could reverse the trend. The choose watch choose heuristic provides a useful strategy here. If complexity keeps rising faster than simplification can reduce it, backtrack to the cause and eliminate it.

Problem 3: The Formulation of Ultraquality Objectives

The way in which ultraquality is expressed, that is, the parameters that define ultraquality performance, will determine not only the development route to be followed, but also the obstacles that will be encountered. Some objectives may be virtually impossible to reach, whereas others, only slightly different, may be eminently practical. Some may be practical enough, but in achieving them, undesirable side effects may become apparent. A proper question to ask when ultraquality seems beyond reach is: Are the stated quality objectives still the right ones?

One might think that a requirement for high reliability, for example, would be easy to specify. But consider some of the ways communications reliability might be defined. A 0.01% failure rate could mean one 1-hour outage per year, or 10 seconds once a day, or a barely audible burst of static of one-tenth millisecond every second. Depending upon the particular communications service provided (voice, electronic funds transfer, facsimile, etc.), the most important factor might be the mean time to recover (MTTR), the mean time between failures (MTBF), or even the time of day when ultraquality was essential.
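The equivalence is simple arithmetic if the 0.01% figure is read as the fraction of time the service is out. That reading is an interpretation, since the text calls it a failure rate. The standard steady-state relation, availability = MTBF / (MTBF + MTTR), shows why the same figure can hide very different behavior. The two MTBF/MTTR profiles below are hypothetical.

```python
SECONDS_PER_DAY = 24 * 3600
SECONDS_PER_YEAR = 365 * SECONDS_PER_DAY


def outage_per_period(unavailability: float, period_s: float) -> float:
    """Total outage time, in seconds, accumulated over one period."""
    return unavailability * period_s


def availability(mtbf_h: float, mttr_h: float) -> float:
    """Steady-state availability, taking MTBF as mean up-time between failures."""
    return mtbf_h / (mtbf_h + mttr_h)


U = 0.0001  # 0.01% of the time out of service

print(outage_per_period(U, SECONDS_PER_YEAR) / 3600)  # hours of outage per year
print(outage_per_period(U, SECONDS_PER_DAY))          # seconds of outage per day
print(outage_per_period(U, 1.0) * 1000)               # milliseconds of outage per second

# Two very different failure profiles with essentially the same availability:
print(availability(mtbf_h=8760, mttr_h=0.876))  # one long outage a year
print(availability(mtbf_h=24, mttr_h=0.0024))   # one short outage a day
```

The outage figures come to roughly 0.88 hour, 8.6 seconds, and 0.1 millisecond, matching the text's rounded values. Both profiles give about 0.9999 availability. The availability number alone cannot distinguish them, which is why MTTR, MTBF, or time of day may matter more for a given service.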

To satisfy different statements of ultraquality, some architectures require ultraquality components, whereas others can rely on easy maintainability and repair. If cost were crucial, some architectures might aim for a minimum spares inventory, centrally located; others might opt for a distributed inventory of selected parts.

Example:

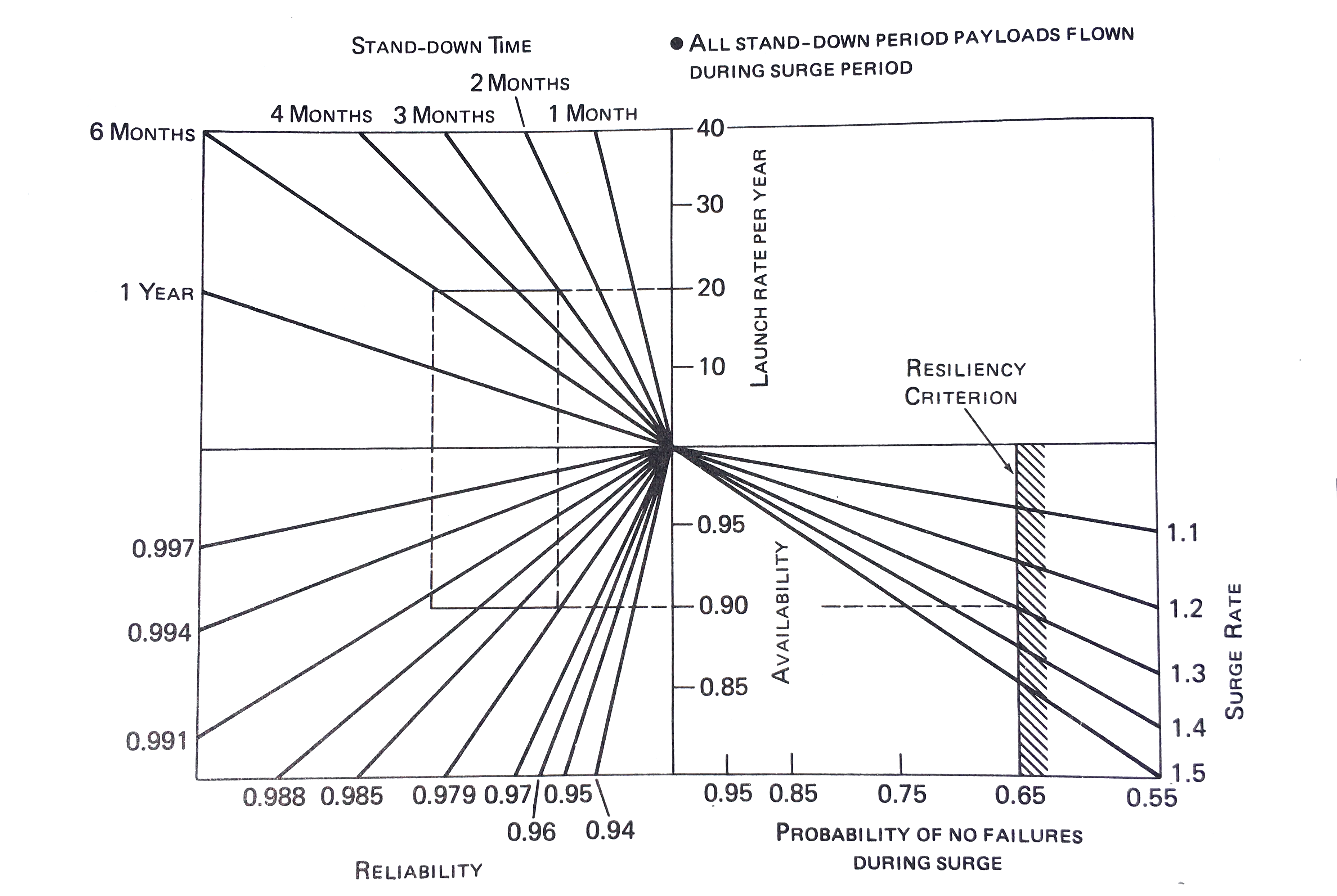

There is a U.S. national policy calling for assured access to space. On closer inspection, assurance depends upon the launch rate per year, vehicle failure rate, stand-down time after failure, probability of no failures while flying off the backlog, and the peak-to-average launch rate. It is not only the failure rate that counts; it is also what happens after a failure. Can the space transportation system recover well, or will it collapse? In brief, assured access for fleet-design purposes is best stated as resiliency to failure, a complex parameter combining all of the factors above. The trade-offs among them are shown in Figure 8-4 (Bernstein 1987).

Figure 8-4. Resiliency to failure (Source: Abbott and Bernstein, "Space Transportation Architecture Resiliency," The Aerospace Corporation 1987)

The resiliency-to-failure graph is a good example of a trade-off among a combination of subordinate objectives that retains the same overall objective. In it, resiliency can be maintained by different combinations of system reliability, stand-down time, and surge rate. If reliability is less than expected, then stand-down time needs to be shorter and surge rate greater, with different combinations yielding different costs. By analyzing them, one could determine the cost-effectiveness of greater reliability or the cost risks of not meeting specifications.
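The shape of this trade-off can be illustrated with a much simpler model than the one behind Figure 8-4. The model and every number below are assumptions for illustration only. The model works in three steps:

1. After a failure, launches stop for a stand-down period while a backlog builds at the nominal launch rate.
2. The backlog is then flown off using the excess of the surge rate over the nominal rate.
3. Each launch during that period must succeed, with per-launch reliability R.

```python
import math
from typing import NamedTuple


class Recovery(NamedTuple):
    backlog: float           # launches missed during the stand-down
    recovery_time_yr: float  # time to fly off the backlog at the surge rate
    launches: float          # launches flown while recovering
    p_clean: float           # probability that none of them fails


def recovery(launch_rate: float, stand_down_yr: float,
             surge_rate: float, reliability: float) -> Recovery:
    """Toy post-failure recovery model (hypothetical, not the Aerospace Corp. model).

    Rates are in launches per year; reliability is per-launch success probability.
    """
    backlog = launch_rate * stand_down_yr
    if backlog == 0:
        return Recovery(0.0, 0.0, 0.0, 1.0)
    if surge_rate <= launch_rate:
        # No excess capacity: the backlog never clears.
        return Recovery(backlog, math.inf, math.inf, 0.0)
    recovery_time = backlog / (surge_rate - launch_rate)
    launches = surge_rate * recovery_time
    return Recovery(backlog, recovery_time, launches, reliability ** launches)


# Baseline: 12 launches/yr, 6-month stand-down, surge to 18/yr.
print(recovery(12, 0.5, 18, 0.98))
# Lower reliability with the same stand-down and surge rate...
print(recovery(12, 0.5, 18, 0.95))
# ...partly offset by a shorter stand-down and a higher surge rate.
print(recovery(12, 0.25, 24, 0.95))
```

In this toy model, the baseline recovers cleanly with probability about 0.70. Dropping per-launch reliability to 0.95 cuts that to about 0.40. Halving the stand-down while raising the surge rate to 24 per year brings it back to about 0.74. This is the qualitative behavior the figure describes: lower reliability demands shorter stand-down and greater surge capacity, each with its own cost.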

The usual presumption is that objectives are formulated and prioritized during concept development, reassessed at the end of it, and passed as firm requirements to system engineering. Constant objectives and firm requirements are certainly ways of maintaining system integrity. But there is an architectural tension here, particularly for ultraquality systems. The fact that the conceptual model appears to be ultraquality is no guarantee that the system, once built, will be. Unexpected technical, budgetary, and financial crises will occur. The objectives as originally formulated may no longer be achievable. The constant objectives and firm requirements may have to be revised.

The architect, once again, will be at the center, trying to achieve a compromise of the extremes and a balance of the tensions, trying to maintain system integrity during potentially chaotic change.

Ultraquality imperatives make the architect's problem particularly difficult: there is almost no margin for error. Reformulating objectives can be mandatory on the one hand and deadly on the other. There is nothing fundamentally wrong with revising objectives, even very late in the project, in the interest of reaching a practical, but still satisfactory, solution. Objectives are revised remarkably often in operational military systems once they are tested in combat; a practical answer may come from new tactics or from use against different targets or counterweapons. A commercial manufacturer, highly responsive to market demands, may redesign and remarket a product in the middle of a production run.

Even so, unless well-understood options have been provided for the system itself to change, changing objectives may not help. Given the difficulty of achieving ultraquality in any case, retaining options as long as possible can be particularly important for ultraquality systems.

Problem 4: Reinforcement

There remains one more important reason for continuing reassessment of ultraquality objectives: making certain that they are repeatedly reinforced. Initial objectives can be easily forgotten. Other worthwhile but lesser objectives will intrude from time to time. Minor obstacles must not be overcome by major changes in objectives; otherwise, the fine edge that it takes to achieve ultraquality may be lost. It only takes one error to do it. It may seem tedious and repetitious for the architect to keep readdressing it, but the question should never go away:

Are we still meeting our system objective of ultraquality?

If not, the game may be over.
